Hi everyone, I'm a beginner who likes exploring and building new things. Sorry that the code snippets are long; I think the full code gives a clearer picture. I'm building a Google Meet-style project with mediasoup, and I have been stuck on this for a month without finding a solution.

I have since restructured my code as shown below. With the earlier version I kept getting an AbortError ("intercept-console-error.ts:46 Playback error: AbortError: The play() request was interrupted by a call to pause()") when the stream arrived and tried to autoplay, which I assumed was caused by the browser's autoplay restriction. I added a manual play button, but the same error still appeared. So I rewrote the whole thing. With the new code below I no longer get the AbortError, but I get a black screen instead.
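For context on that earlier AbortError, here is a minimal sketch of how a play() call can be guarded. It is not my original code: `attachAndPlay` and `PlayableElement` are hypothetical names, and it assumes the error comes from a pending play() being superseded (for example by a new load, an explicit pause(), or a re-render removing the video element).

```typescript
// Hypothetical minimal shape: an HTMLVideoElement satisfies it, and so does
// a plain fake object, which makes the helper easy to exercise outside a browser.
interface PlayableElement<S> {
  srcObject: S | null;
  play(): Promise<void>;
}

type PlayResult = "playing" | "interrupted" | "blocked";

// Attach a stream and start playback, classifying the two common rejections
// instead of letting them surface as unhandled errors.
async function attachAndPlay<S>(
  el: PlayableElement<S>,
  stream: S
): Promise<PlayResult> {
  // Assigning srcObject restarts loading, which rejects any pending play(),
  // so only assign when the stream actually changed.
  if (el.srcObject !== stream) {
    el.srcObject = stream;
  }
  try {
    await el.play();
    return "playing";
  } catch (err) {
    const name = (err as { name?: string }).name;
    // The pending play() was superseded by a pause or a new load.
    if (name === "AbortError") return "interrupted";
    // Autoplay policy: playback needs a user gesture or a muted element.
    if (name === "NotAllowedError") return "blocked";
    throw err;
  }
}
```

In a component this would be called from the place where the remote stream is attached, and a "blocked" result would be the cue to show the manual play button.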

This is the worker creation code:

const totalThreads = os.cpus().length;

// Create one mediasoup worker per CPU core.

// An async function already returns a promise, so no `new Promise(async ...)` wrapper is needed;

// any error thrown by createWorker() rejects the returned promise.

export const createWorkers = async (): Promise<mediasoupTypes.Worker[]> => {

const workers: mediasoupTypes.Worker[] = [];

for (let i = 0; i < totalThreads; i++) {

const worker: mediasoupTypes.Worker = await mediasoup.createWorker({

rtcMinPort: config.workerSettings.rtcMinPort,

rtcMaxPort: config.workerSettings.rtcMaxPort,

logLevel: config.workerSettings.logLevel,

logTags: config.workerSettings.logTags,

});

// A dead worker cannot be recovered, so exit and let the process manager restart the server.

worker.on("died", () => {

console.error("Worker has died");

process.exit(1);

});

workers.push(worker);

}

return workers;

};

// Mediasoup Events In the backend

socket.on("getRtpCapabilities", (data, callback) => {

try {

const { streamId } = data;

const router = this.routers[streamId];

if (router) {

callback({ rtpCapabilities: router.rtpCapabilities });

} else {

callback({ error: "Router not found" });

}

} catch (error) {

console.error("Error getting RTP capabilities:", error);

callback({ error: "Failed to get RTP capabilities" });

}

});

socket.on("createWebRtcTransport", async (data, callback) => {

try {

const { streamId, direction } = data; // 'send' or 'recv'

if (!streamId) {

callback({ error: "Stream ID is required" });

return;

}

const router = this.routers[streamId];

if (!router) {

callback({ error: "Router not found" });

return;

}

if (

!this.participants[streamId] ||

!this.participants[streamId][socket.id]

) {

callback({ error: "Participant not found" });

return;

}

const participant = this.participants[streamId][socket.id];

const transport = await router.createWebRtcTransport({

listenIps: [{ ip: "0.0.0.0", announcedIp: "127.0.0.1" }], // 0.0.0.0 needs an announcedIp; 127.0.0.1 assumes same-machine testing

enableUdp: true,

enableTcp: true,

preferUdp: true,

});

if (direction === "send") {

participant.sendTransport = transport;

} else {

participant.receiveTransport = transport;

}

callback({

id: transport.id,

iceParameters: transport.iceParameters,

iceCandidates: transport.iceCandidates,

dtlsParameters: transport.dtlsParameters,

});

} catch (error) {

console.error("Error creating WebRtcTransport:", error);

callback({ error: "Failed to create transport" });

}

});

socket.on("connectTransport", async (data, callback) => {

try {

const { streamId, transportId, dtlsParameters } = data;

if (

!streamId ||

!this.participants[streamId] ||

!this.participants[streamId][socket.id]

) {

callback({ error: "Participant not found" });

return;

}

const participant = this.participants[streamId][socket.id];

const transport =

participant.sendTransport?.id === transportId

? participant.sendTransport

: participant.receiveTransport;

if (!transport) {

callback({ error: "Transport not found" });

return;

}

await transport.connect({ dtlsParameters });

callback({ success: true });

} catch (error) {

console.error("Error connecting transport:", error);

callback({ error: "Failed to connect transport" });

}

});

socket.on("produce", async (data, callback) => {

try {

const { streamId, kind, rtpParameters, appData } = data;

if (

!streamId ||

!this.participants[streamId] ||

!this.participants[streamId][socket.id]

) {

callback({ error: "Participant not found" });

return;

}

const participant = this.participants[streamId][socket.id];

if (!participant.sendTransport) {

callback({ error: "Send transport not found" });

return;

}

const producer = await participant.sendTransport.produce({

kind,

rtpParameters,

appData, // e.g., { source: "webcam" | "mic" | "screen" }

});

participant.producers.push(producer);

// Add to stream producers list for new participants

if (!this.streamProducers[streamId]) {

this.streamProducers[streamId] = [];

}

this.streamProducers[streamId].push({

id: producer.id,

kind,

userId: participant.userId,

appData,

});

// Notify all participants about the new producer

this.io.to(streamId).emit("newProducer", {

producerId: producer.id,

userId: participant.userId,

kind,

appData,

});

callback({ id: producer.id });

// Listen for producer close events

producer.on("transportclose", () => {

console.log(`Producer ${producer.id} transport closed`);

this.removeProducerFromStream(streamId, producer.id);

});

producer.observer.on("close", () => { // "@close" is an internal event; the public one is on producer.observer

console.log(`Producer ${producer.id} closed`);

this.removeProducerFromStream(streamId, producer.id);

});

} catch (error) {

console.error("Error producing:", error);

callback({ error: "Failed to produce" });

}

});

socket.on("pauseProducer", async (data, callback) => {

try {

const { producerId } = data;

const streamId = this.socketToStream[socket.id];

if (

!streamId ||

!this.participants[streamId] ||

!this.participants[streamId][socket.id]

) {

callback({ error: "Participant not found" });

return;

}

const participant = this.participants[streamId][socket.id];

const producer = participant.producers.find(

(p) => p.id === producerId

);

if (!producer) {

callback({ error: "Producer not found" });

return;

}

await producer.pause();

// Notify other participants with appData

this.io.to(streamId).emit("producerPaused", {

producerId,

userId: participant.userId,

appData: producer.appData,

});

callback({ success: true });

} catch (error) {

console.error("Error pausing producer:", error);

callback({ error: "Failed to pause producer" });

}

});

// Resume producer

socket.on("resumeProducer", async (data, callback) => {

try {

const { producerId } = data;

const streamId = this.socketToStream[socket.id];

if (

!streamId ||

!this.participants[streamId] ||

!this.participants[streamId][socket.id]

) {

callback({ error: "Participant not found" });

return;

}

const participant = this.participants[streamId][socket.id];

const producer = participant.producers.find(

(p) => p.id === producerId

);

if (!producer) {

callback({ error: "Producer not found" });

return;

}

await producer.resume();

// Notify other participants with appData

this.io.to(streamId).emit("producerResumed", {

producerId,

userId: participant.userId,

appData: producer.appData,

});

callback({ success: true });

} catch (error) {

console.error("Error resuming producer:", error);

callback({ error: "Failed to resume producer" });

}

});

socket.on("consume", async (data, callback) => {

try {

const { producerId, rtpCapabilities } = data;

const streamId = this.socketToStream[socket.id];

if (!streamId) {

callback({ error: "Stream ID is required" });

return;

}

console.log(this.participants, "participants in consume");

const router = this.routers[streamId];

if (!router) {

callback({ error: "Router not found" });

return;

}

console.log(

streamId,

"streamId in consume",

socket.id,

"socketId in consume"

);

if (

!this.participants[streamId] ||

!this.participants[streamId][socket.id]

) {

console.log(

"participants in consume if condition",

this.participants

);

console.log("socketId in consume if condition", socket.id);

callback({ error: "Participant not found" });

return;

}

const participant = this.participants[streamId][socket.id];

if (!participant.receiveTransport) {

callback({ error: "Receive transport not found" });

return;

}

console.log("finding producer object");

// Find the producer by ID

let producerObject: mediasoupTypes.Producer | undefined;

for (const p of Object.values(this.participants[streamId])) {

producerObject = p.producers.find((prod) => prod.id === producerId);

if (producerObject) break;

}

if (!producerObject) {

callback({ error: "Producer not found" });

return;

}

// Check if router can consume

if (!router.canConsume({ producerId, rtpCapabilities })) {

callback({

error: "Router cannot consume with given RTP capabilities",

});

return;

}

const consumer = await participant.receiveTransport.consume({

producerId,

rtpCapabilities,

paused: true, // Start paused, will resume after getting response

});

participant.consumers.push(consumer);

console.log(participant.consumers, "consumers in consume");

// Handle consumer events

consumer.on("transportclose", () => {

console.log(`Consumer ${consumer.id} transport closed`);

});

consumer.on("producerclose", () => {

console.log(`Consumer ${consumer.id} producer closed`);

const index = participant.consumers.findIndex(

(c) => c.id === consumer.id

);

if (index !== -1) {

participant.consumers.splice(index, 1);

}

// Notify the client that producer was closed

socket.emit("consumerClosed", {

consumerId: consumer.id,

reason: "producer closed",

});

});

console.log(

"sending to the frontend consumer data",

consumer.id,

producerId,

consumer.kind,

consumer.rtpParameters,

streamId

);

callback({

id: consumer.id,

producerId,

kind: consumer.kind,

rtpParameters: consumer.rtpParameters,

});

} catch (error) {

console.error("Error consuming:", error);

callback({ error: "Failed to consume" });

}

});

socket.on("resumeConsumer", async (data, callback) => {

try {

const { consumerId } = data;

const streamId = this.socketToStream[socket.id];

if (!streamId) {

callback({ error: "You are not connected to any stream" });

return;

}

if (

!this.participants[streamId] ||

!this.participants[streamId][socket.id]

) {

callback({ error: "Participant not found" });

return;

}

const participant = this.participants[streamId][socket.id];

const consumer = participant.consumers.find(

(c) => c.id === consumerId

);

if (!consumer) {

callback({ error: "Consumer not found" });

return;

}

await consumer.resume();

callback({ success: true });

} catch (error) {

console.error("Error resuming consumer:", error);

callback({ error: "Failed to resume consumer" });

}

});

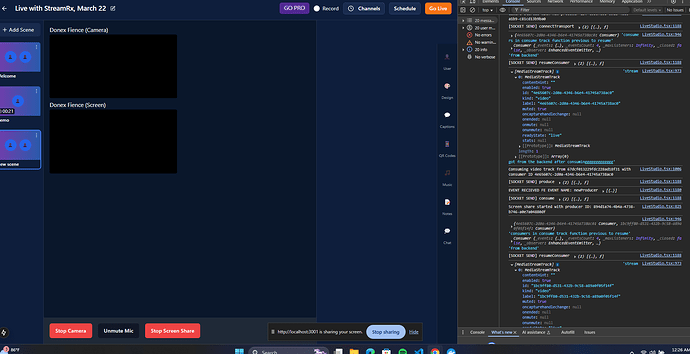

The issue I'm facing is that every consumed track arrives muted, so every client shows the stream as a black screen. I noticed that the consumed track has muted: true for all three types of stream.

I'm testing on a single machine with two tabs (one incognito, one normal). I'm also displaying the local participant's stream by consuming it. I know that isn't good practice, but I did it for testing so that I don't need a second participant just to consume.

I've been stuck on this for a month, so if anybody knows what is going on, please share your thoughts. I've shared my full server code above. I'm using Next.js and Node.js for this setup, and I've attached a screenshot of the console for reference.
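To make the expected client-side order explicit, here is a minimal sketch of the consume-then-resume sequence that the "consume" and "resumeConsumer" handlers above are written for. It is not my actual client code. `EmitWithAck`, `RecvTransportLike`, `ConsumerLike` and `consumeAndResume` are hypothetical names standing in for a socket.io emit-with-acknowledgement wrapper and a mediasoup-client receive transport. Since the server creates the consumer with paused: true, no RTP is sent until "resumeConsumer" is called, and as far as I understand a remote track stays muted until media actually arrives.

```typescript
// Hypothetical stand-ins for the socket.io client and mediasoup-client objects.
type EmitWithAck = (event: string, data: Record<string, unknown>) => Promise<any>;

interface TrackLike {
  muted: boolean;
  onunmute: (() => void) | null;
}

interface ConsumerLike {
  id: string;
  track: TrackLike;
}

interface RecvTransportLike {
  consume(options: {
    id: string;
    producerId: string;
    kind: "audio" | "video";
    rtpParameters: unknown;
  }): Promise<ConsumerLike>;
}

async function consumeAndResume(
  request: EmitWithAck,
  recvTransport: RecvTransportLike,
  producerId: string,
  rtpCapabilities: unknown
): Promise<ConsumerLike> {
  // 1. Ask the server to create the consumer (created with paused: true).
  const params = await request("consume", { producerId, rtpCapabilities });
  if (params.error) throw new Error(params.error);

  // 2. Create the matching local consumer from the server's answer.
  const consumer = await recvTransport.consume({
    id: params.id,
    producerId: params.producerId,
    kind: params.kind,
    rtpParameters: params.rtpParameters,
  });

  // Log when media starts flowing; useful for telling "never resumed"
  // apart from "resumed but no packets arrive".
  consumer.track.onunmute = () => {
    console.log(`track of consumer ${consumer.id} unmuted`);
  };

  // 3. Only now ask the server to resume; without this step the
  //    server-side consumer stays paused and sends nothing.
  const resumed = await request("resumeConsumer", { consumerId: consumer.id });
  if (resumed.error) throw new Error(resumed.error);

  return consumer;
}
```

If the unmute log never appears even though "resumeConsumer" succeeds, the receive transport probably never connected, so the transport's connection state would be the next thing to check.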